2025

AI Resume Chat

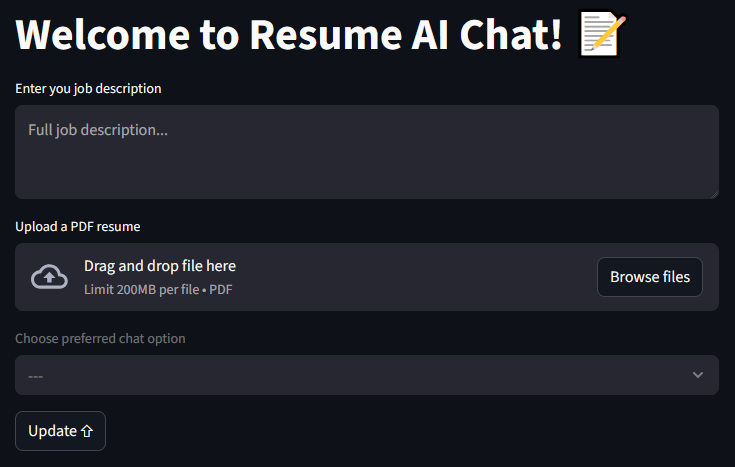

I made my own AI chat application to adjust my resume for every new job I apply for.

If, like me, you have been applying for jobs, you know that tailoring your resume again and again to fit each job description gets tiring. And even then, how effective is it really? I also worry about what questions the interviewer will ask about my resume.

In today’s highly competitive job market, a resume that stands out is important. Tools like Ollama and LangChain offer AI models and tooling that can be used not only to enhance your resume but also to prepare you for interviews.

Large Language Models (LLMs)

These are the tools you may have interacted with through ChatGPT, Gemini, Claude, and others. To use them to update a resume, we have to instruct, or prompt, them carefully. For me, it works best to structure the prompt by role, task, input, and output.

Define the role the model should play, describe its task in detail, tell it what inputs to expect, and say how it should present the results.
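The role, task, input, output structure can be sketched in a few lines of Python. This is only an illustration: the function name `build_prompt` and the example wording are assumptions and are not taken from the AI Resume Chat code.

```python
def build_prompt(role: str, task: str, inputs: str, output: str) -> str:
    """Assemble a prompt from the four parts: role, task, input, output."""
    sections = [
        ("Role", role),
        ("Task", task),
        ("Input", inputs),
        ("Output", output),
    ]
    # Each part gets its own labeled paragraph so the model can tell them apart.
    return "\n\n".join(f"{title}:\n{text.strip()}" for title, text in sections)


# Illustrative wording only; adjust each part to your own situation.
prompt = build_prompt(
    role="You are an experienced technical recruiter.",
    task="Compare the resume with the job description and suggest edits.",
    inputs="A job description followed by the text of a resume.",
    output="A bulleted list of concrete changes, most important first.",
)
print(prompt)
```

Keeping the four parts separate makes it easy to swap only the task when moving from resume editing to interview practice.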

I’ve decided to take this a step further and develop my own application for my resumes.

Setup

For this application, I use the Llama3 model from Ollama with Python and LangChain. The application does the following:

- Read the job description provided

- Read your PDF resume

- Answer your questions based on your job description and resume

- Suggest modifications to your resume

- Do a mock interview

Instead of only working through the terminal, which I often do, I integrated Streamlit into the AI Resume Chat application for an easier user interface.

Usage

The first entry, the job description, becomes part of the system prompt itself, because every conversation depends on it.

The second entry, a PDF resume, is the next part of the system prompt. Retrieval-augmented generation (RAG) pulls the required snippets from the uploaded resume and, based on these, the application can also pull in relevant parts of the previous chat history. All of this is provided to the LLM, which gives us its insights.

The third entry, a drop down, allows the selection of a chat option:

- Enhanced Resume

- Simulate Interview

Both options have their own prompt, one to help enhance a resume and one to conduct an interview based on the job description and the provided resume.
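As a rough sketch of how the three entries could come together, the Python below builds one system prompt from the chosen option, the job description, and a few resume excerpts. The prompt wording, the `CHAT_OPTIONS` dictionary, and `build_system_prompt` are hypothetical; the real application uses LangChain and its own prompts, and the retrieval step is left out here.

```python
# Hypothetical prompt text, one per chat option; the wording is illustrative only.
CHAT_OPTIONS = {
    "Enhanced Resume": "Suggest changes that make the resume fit the job description.",
    "Simulate Interview": "Act as the interviewer and ask one question at a time.",
}


def build_system_prompt(option: str, job_description: str, resume_snippets: list[str]) -> str:
    """Combine the chosen option's prompt, the job description, and resume excerpts."""
    if option not in CHAT_OPTIONS:
        raise ValueError(f"Unknown chat option: {option}")
    snippets = "\n".join(f"- {s}" for s in resume_snippets)
    return (
        f"{CHAT_OPTIONS[option]}\n\n"
        f"Job description:\n{job_description}\n\n"
        f"Relevant resume excerpts:\n{snippets}"
    )
```

In this sketch the excerpts are passed in as plain strings; in a RAG setup they would come from a retriever run against the uploaded PDF.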

After clicking the Update button, the model is loaded with all of the provided information. When the model is ready, a new entry appears where the user can ask questions like “How can I change my resume for this job description?”, “What is wrong with my resume?”, or “Hello! Let’s start the interview please.”

If you want to use it, please visit the GitHub repository and follow the directions in the README.md file. All of this assumes you know how to use Python.

Data Redaction

Using data redaction on all my repositories.

Data Redaction

The term for removing private information from code is data redaction. This technique involves masking or obscuring sensitive data within a document or dataset to protect personally identifiable information (PII) and other confidential data. Additionally, the process of hiding or removing sensitive information from documents is referred to as document redaction.

Some Traditional Methods

Let’s say we have a docker compose file for a complex set of services, or maybe a Python program for running an AI model. We want to share the code, but we don’t want to share our local information like the internal servers being used, their ports, the website domains, user names, passwords, contact information, and more.

I won’t bother explaining docker compose yaml files and their .env and secret files, but they are the best solution there. For docker compose one would use .env and secret files that are listed in the .gitignore file so they never get committed. The .env, though listed in .gitignore, can be accompanied by a committed example like ‘example.env’ so whoever uses the code can see what they need to provide. The same applies to the secret files: each can have a committed stand-in like ‘example.db_root_password.txt’. Documentation is very important.

For an application, such as a Python program, one might use environment variables and document what they should look like so whoever uses the code knows what to supply. A simple launch script can set those environment variables, as shown here, while the launch script itself is excluded using .gitignore…

#!/bin/bash

# File: launch.sh

# export makes the variables visible to the python process started below

export GPU_BASE_URL="MyPc1.local"

export GPU_BASE_PORT="5005"

python ./my-ai-script.py

# File: .gitignore

.env

secrets/

launch.sh

My Custom Cover-All Redaction Method

I personally have been using a custom bash script that just goes through every folder and file in the repository replacing anything private with something else. The whole redaction process can be undone using the same script. All the private information is kept in a ‘private-information.txt’ file that is never in any repository. This way all the private information is in one place and before any commit, using a git pre-commit hook, everything gets redacted.

#!/bin/bash

# File: .git/hooks/pre-commit

# Git runs hooks from the top of the working tree, so ./ is the repository root

count=$(/scripts/search.sh ./ | wc -l)

if [ "$count" -gt 0 ]; then

/scripts/replace.sh ./

fi

The information used by search.sh and replace.sh is in the ‘private-information.txt’ file. All search and replace goes in the order of the longer string to be replaced down to the smaller string to be replaced so the longer one gets matched first.

One will notice that I am using an equal sign as a separator in this example. If one wants to use this script, just use a character not likely to be redacted, like a whitespace or control character, e.g. \r, \n, \v, etc.

Here is an example of what the private-information.txt file would look like:

amigo.lan=MyPc1.local

hermano.lan=MyPc2.local

amigo=MyPc1

hermano=MyPc2

MySecretPassword1=<password>

MySecretPassword2=<Password>

MySecretPassword3=<Pwd>

MyUserName=<login>

me@mywebsite.net=user@domain.tld

contact@mywebsite.net=name@domain.tld

support@mywebsite.net=id@domain.tld

blog.mywebsite.net=sub.domain.tld

photos.mywebsite.net=immich.domain.tld

nc.mywebsite.net=nextcloud.domain.tld

mywebsite.net=domain.tld

Pretty simple, right? The whole point is to always cover anything that needs to be redacted with the ability to undo the redaction when needed. Let’s say one has all their repositories in one ‘repos’ folder. Every repository can then be kept redacted by running this script at any time.
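To make the longest-first ordering and the undo step concrete, here is a minimal Python sketch of the same idea. It is not the bash script from this series; `load_pairs` and `redact` are names made up for the illustration, and only three of the example pairs are used.

```python
def load_pairs(text: str, separator: str = "=") -> list[tuple[str, str]]:
    """Parse 'private=redacted' lines into (private, redacted) pairs."""
    pairs = []
    for line in text.splitlines():
        if separator in line:
            private, redacted = line.split(separator, 1)
            pairs.append((private, redacted))
    return pairs


def redact(text: str, pairs: list[tuple[str, str]], swap: bool = False) -> str:
    """Replace private strings with redacted ones, or the reverse when swap is True."""
    if swap:
        pairs = [(redacted, private) for private, redacted in pairs]
    # Longest search string first, so 'amigo.lan' is handled before 'amigo'.
    for search, replacement in sorted(pairs, key=lambda p: len(p[0]), reverse=True):
        text = text.replace(search, replacement)
    return text


pairs = load_pairs("amigo.lan=MyPc1.local\namigo=MyPc1\nmywebsite.net=domain.tld")
redacted = redact("ssh amigo.lan  # see blog.mywebsite.net", pairs)
print(redacted)                            # ssh MyPc1.local  # see blog.domain.tld
print(redact(redacted, pairs, swap=True))  # ssh amigo.lan  # see blog.mywebsite.net
```

The undo only works cleanly when no redacted value is a substring of unrelated text, which is worth keeping in mind when choosing the stand-in strings.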

What are these search and replace scripts I’m talking about? See ya in the next post!

Post: Search For Redacted Data

Data Redaction - Search

Searching for private and redacted information in my repositories.

Data Redaction Search Script

One of the things I like to do when it comes to data redaction for my repositories is to check for any private information. I have this bash script called ‘search.sh’ that will search the whole folder for any private information. The script can do the opposite as well, searching for redacted information.

Please take a look at Data Redaction for the ‘private-information.txt’ that will be used here.

Sample run of search.sh

# Bad run...

/scripts/search.sh

Usage: /scripts/search.sh [option] <directory>

Options:

-s | --swap Search for redacted

# Search for private information

/scripts/search.sh ./

# Search for redacted information

/scripts/search.sh -s ./

For this script, since my repositories always have the same layout, I define some bash variables that are easy to update. Please excuse the bad naming; they’ve been there for years.

# SECRETS is the file containing patterns to search for

SECRETS="/private-information.txt"

# EXD is a comma-separated list of directories to exclude

EXD="--exclude-dir={venv,.git,.vscode}"

# EXEXT is a comma-separated list of file extensions to exclude

EXEXT="--exclude=*.{svg,webp,png,jpg,pdf,docx,gz,zip,tar}"

# TEMPFILE is where to store the altered private information

TEMPFILE="/tmpfs/temp-patterns.txt"

Next, the script loads all the lines from the ‘private-information.txt’ file and writes either the private or the redacted half of each line to the TEMPFILE location.

# Read in all the private information and put it all in the lines array

mapfile -t lines < $SECRETS

# For each line in the lines array we are going to create the actual

# file that will be used for searching later.

for line in "${lines[@]}"; do

# In the example 'private-information.txt' each private and redacted

# are separated by an equal sign

IFS='=' read -r -a array <<< "$line"

# Swap decides if we will use redacted or private

if $swap; then

echo "${array[1]}"

else

echo "${array[0]}"

fi

done > $TEMPFILE

SECRETS="$TEMPFILE"

Now, we just run the grep command and see what is in there…

# There were problems with string interpolation so the script uses eval on a string

# $folder is the script parameter for the folder to search

command="grep $EXD $EXEXT -RiIFn -f $SECRETS $folder"

eval $command
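For readers who do not use grep every day, the flags above ask for a case-insensitive, fixed-string search that reports line numbers. A small Python sketch of that matching step, on lines already read into memory, could look like this. `find_matches` is a hypothetical helper, not part of search.sh.

```python
def find_matches(lines: list[str], patterns: list[str]) -> list[tuple[int, str]]:
    """Return (line number, line) for each line containing any pattern, ignoring case."""
    # Empty patterns are dropped because an empty string would match every line.
    needles = [p.lower() for p in patterns if p]
    hits = []
    for number, line in enumerate(lines, start=1):
        lowered = line.lower()
        if any(needle in lowered for needle in needles):
            hits.append((number, line))
    return hits


sample = ["host = AMIGO.lan", "port = 5005", "mail me@mywebsite.net"]
for number, line in find_matches(sample, ["amigo.lan", "mywebsite.net"]):
    print(f"{number}:{line}")
```

Plain substring tests are used instead of regular expressions, which mirrors what the -F flag does for grep.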

The end result of running this script is either nothing or the file name, line number, and the text that matches the private or redacted information being searched for. As for replacing private with redacted; see ya in the next post!

Post: Replacing Redacted Data

Data Redaction - Replace

Replacing private or redacted information in my repositories.

Data Redaction Replace Script

It’s time to take a look at the script that will replace the private information with the redacted information. The same script can undo that switch of information making it very convenient when storing code on a remote repository without making it private. This bash script is called ‘replace.sh’ and it will search the whole folder for any private information replacing it with the redacted version.

Please take a look at Data Redaction for the ‘private-information.txt’ that will be used here.

Sample run of replace.sh

# Bad run...

/scripts/replace.sh

Usage: /scripts/replace.sh [option] <directory>

Options:

-s | --swap Replace redacted with private

# Replace private information with a redacted version

/scripts/replace.sh ./

# Replace the redacted version with original private information

/scripts/replace.sh -s ./

For this script, I’m adding one bash variable, and two of the others change slightly from search.sh: EXD and EXEXT hold bare comma-separated names here because they are turned into find parameters further down. They are easy to update this way. Again, please excuse the bad naming; they’ve been there for years.

# SECRETS is the file containing patterns to search for

SECRETS="/private-information.txt"

# EXD is a comma-separated list of directories to exclude

EXD="venv,.git,.vscode"

# EXEXT is a comma-separated list of file extensions to exclude

EXEXT="svg,webp,png,jpg,pdf,docx,gz,zip,tar"

# SED_SCRIPT is the temporary sed script file

SED_SCRIPT="/tmpfs/script.sed"

This script uses the find command so we need to add the parameters to exclude the chosen directories and file extensions. I think it makes things easier to store the directories and file extensions to exclude in variables and then just generate what is needed for the find command.

This find command will be used like this…

find ./folder -type f \( ! -iname "*.png" ! -iname "*.zip" \) ! -path "*/venv/*" ! -path "*/.git/*" -print

To generate the directories to be excluded the following script is used:

# Build the exclude paths

path=""

IFS=',' read -r -a fld <<< "$EXD"

for d in "${fld[@]}"; do

path="$path ! -path \"*/${d}/*\""

done

To generate the file extensions to be excluded the following script is then used:

# Build the exclude extensions

extension="\( "

IFS=',' read -r -a ext <<< "$EXEXT"

for e in "${ext[@]}"; do

extension="$extension ! -iname \"*.${e}\""

done

extension+=" \)"
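The same filtering can be sketched in Python with os.walk, which may help when reading the generated find parameters. This is a stand-in for the find command, not what replace.sh does; the set names and `candidate_files` are assumptions for the example.

```python
import os

# Same exclusions as EXD and EXEXT, written as Python sets (assumed names).
EXCLUDED_DIRS = {"venv", ".git", ".vscode"}
EXCLUDED_EXTENSIONS = {".svg", ".webp", ".png", ".jpg", ".pdf", ".docx", ".gz", ".zip", ".tar"}


def candidate_files(folder: str) -> list[str]:
    """List files under folder, skipping excluded directories and extensions."""
    found = []
    for root, dirs, files in os.walk(folder):
        # Pruning dirs in place stops os.walk from descending into them.
        dirs[:] = [d for d in dirs if d not in EXCLUDED_DIRS]
        for name in files:
            # Lower-casing mirrors the case-insensitive -iname test of find.
            extension = os.path.splitext(name)[1].lower()
            if extension not in EXCLUDED_EXTENSIONS:
                found.append(os.path.join(root, name))
    return sorted(found)
```

Calling `candidate_files(".")` from a repository root would return every file that is not in an excluded folder and does not have an excluded extension.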

This script also is going to use the sed command. Using the sed script file is best in this situation but the file has to be generated. The sed command will make changes directly to the target file while making a backup before making that change.

The sed command will be used like this…

# SED script file is, per line, like this...

# s/SEARCH/REPLACE/g

sed --in-place=.bak --file=/tmpfs/script.sed this-file.txt

To generate the SED script file we have to read the ‘private-information.txt’ and transform it, taking the swap parameter into consideration. sed has no literal (non-regex) substitution mode, so escaping is added for both the private and the redacted strings.

# Build the sed script from the secrets file

mapfile -t lines < $SECRETS

for line in "${lines[@]}"; do

IFS='=' read -r -a array <<< "$line"

# Swapped or not all entries must be escaped

if $swap; then

escaped_lhs="${array[1]//[][\\\/.^\$*]/\\&}"

escaped_rhs="${array[0]//[\\\/&$'\n']/\\&}"

else

escaped_lhs="${array[0]//[][\\\/.^\$*]/\\&}"

escaped_rhs="${array[1]//[\\\/&$'\n']/\\&}"

fi

escaped_rhs="${escaped_rhs%\\$'\n'}"

echo "s/${escaped_lhs}/${escaped_rhs}/g"

done > $SED_SCRIPT

Next, we just need to get the list of files that can be modified…

# Find files, filter for ASCII, and create an array of files

command="find $folder -type f $extension $path -print"

results=$(eval $command | xargs file | grep ASCII | cut -d: -f1)

readarray -t files <<< "$results"

With the list of files that can be modified we will now apply the SED Script file to each of them.

for file in "${files[@]}"; do

sed --in-place=.bak --file="$SED_SCRIPT" "$file"

# Check if the file was actually modified by sed

if ! cmp -s "$file" "$file.bak"; then

# State which file was modified by sed

echo "Modified $file"

fi

# Remove the backup copies

rm "$file.bak"

done
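The loop above detects a change by comparing each file with its backup. A Python sketch can get the same "was it modified?" answer by comparing the text in memory instead, which is a different technique from the sed backup approach. `rewrite_file` is a hypothetical helper, and it assumes the files are UTF-8 text.

```python
def rewrite_file(path: str, replacements: list[tuple[str, str]]) -> bool:
    """Apply literal replacements to one file; return True if its content changed."""
    # newline="" keeps the file's own line endings untouched on read and write.
    with open(path, "r", encoding="utf-8", newline="") as handle:
        original = handle.read()
    updated = original
    for search, replacement in replacements:
        updated = updated.replace(search, replacement)
    if updated == original:
        return False  # nothing matched, so the file is left alone
    with open(path, "w", encoding="utf-8", newline="") as handle:
        handle.write(updated)
    return True
```

A caller could print "Modified" plus the path whenever the function returns True, matching the output of the bash loop.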

And that is it. I put both search.sh and replace.sh in a git pre-commit hook. These files only need to be changed when there is a new type of folder or file type to ignore when replacing private and redacted information.

2024

Retry Action

Let’s retry an action and maybe a function…

Keep in mind that this particular job had a specific rule of not allowing any third-party libraries. Everything had to be done ad hoc.

The Action Keeps Failing Challenge

While working with the team, I often found that tasks would fail due to problems outside our domain. Often this was due to the SQL server timing out, which we couldn’t change. Sometimes a service we were relying on was slow starting up; all of our services would sleep after a mere 5 minutes.

We could just keep popping up the error notification and make the user resubmit but even I didn’t like doing that myself. I came up with an idea for going ahead and retrying until there was a definite point to give up.

A Retry Function

/// <summary>

/// Retry a failed action

/// </summary>

/// <param name="action">Action to perform</param>

/// <param name="numberOfRetries">Number of retries</param>

/// <param name="delayMs">Delay between retries. Default is no delay.</param>

public static void RetryAction(Action action, int numberOfRetries, int delayMs = 0)

{

Exception? exception = null;

int retries = 0;

while (retries < numberOfRetries)

{

try

{

action();

return;

}

catch (Exception ex)

{

// Ignore error

exception = ex;

retries++;

}

if (delayMs > 0)

{

Task.Delay(delayMs).Wait();

}

}

throw exception!;

}

Example

public static void ThisMightFail()

{

const int notAllowed = 1;

Console.Write("Enter a number: ");

var input = int.Parse(Console.ReadLine() ?? "0");

if (input == notAllowed)

{

Console.WriteLine($"Your number must not be {notAllowed}");

throw new ArgumentException($"Your number must not be {notAllowed}");

}

Console.Write("Number accepted!");

}

RetryAction(ThisMightFail /* The action to retry */,

5 /* 5 retries */,

500 /* 1/2 second delay */);

What It Looks Like If It Fails

Enter a number: 1

Your number must not be 1

Enter a number: 1

Your number must not be 1

Enter a number: 1

Your number must not be 1

Enter a number: 1

Your number must not be 1

Enter a number: 1

Message: Your number must not be 1

Source: RetryActionDemo

HelpLink:

StackTrace: at RetryActionDemo.Program.Main(String[] args) in ....

What It Looks Like If It Succeeds

Enter a number: 1

Your number must not be 1

Enter a number: 1

Your number must not be 1

Enter a number: 1

Your number must not be 1

Enter a number: 1

Your number must not be 1

Enter a number: 2

Number accepted!

Okay, what about parameters and return values…

public static int GetTheNumber(int notAllowed = 1)

{

Console.Write("Enter a number: ");

var input = int.Parse(Console.ReadLine() ?? "0");

if (input == notAllowed)

{

Console.WriteLine($"Your number must not be {notAllowed}");

throw new ArgumentException($"Your number must not be {notAllowed}");

}

Console.Write("Number accepted!");

return input;

}

/* This is a wrapper so we can pass a parameter */

public static int ThisMightFail2()

{

return GetTheNumber(3);

}

var res = RetryAction(ThisMightFail2 /* The action to retry */,

5 /* 5 retries */,

500 /* 1/2 second delay */);

Console.WriteLine(res);

The New RetryAction

/// <summary>

/// Retry a failed function

/// </summary>

/// <param name="fn">Function to perform</param>

/// <param name="numberOfRetries">Number of retries</param>

/// <param name="delayMs">Delay between retries. Default is no delay.</param>

public static TResult? RetryAction<TResult>(Func<TResult> fn, int numberOfRetries, int delayMs = 0)

{

Exception? exception = null;

int retries = 0;

while (retries < numberOfRetries)

{

try

{

return fn();

}

catch (Exception ex)

{

// Ignore error

exception = ex;

retries++;

}

if (delayMs > 0)

{

Task.Delay(delayMs).Wait();

}

}

throw exception!;

}
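For comparison, the same retry idea can be sketched in Python. This is not a port of the C# code above: the name `retry` and its parameters are assumptions, it skips the delay after the final failed attempt, and it rejects an attempt count below one instead of throwing a null exception.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry(fn: Callable[[], T], attempts: int, delay_seconds: float = 0.0) -> T:
    """Call fn until it succeeds or attempts run out, then re-raise the last error."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return fn()
        except Exception:
            if attempt == attempts:
                raise  # out of attempts: surface the last failure
            if delay_seconds > 0:
                time.sleep(delay_seconds)
    raise AssertionError("unreachable")  # the loop always returns or raises
```

A lambda takes the place of the wrapper method when a parameter is needed, for example `retry(lambda: get_the_number(3), attempts=5, delay_seconds=0.5)`, where `get_the_number` stands for whatever function might fail.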

What It Looks Like If It Fails

Enter a number: 3

Your number must not be 3

Enter a number: 3

Your number must not be 3

Enter a number: 3

Your number must not be 3

Enter a number: 3

Your number must not be 3

Enter a number: 3

Message: Your number must not be 3

Source: RetryActionDemo

HelpLink:

StackTrace: at RetryActionDemo.Program.Main(String[] args) in ....

What It Looks Like If It Succeeds

Enter a number: 3

Your number must not be 3

Enter a number: 3

Your number must not be 3

Enter a number: 3

Your number must not be 3

Enter a number: 3

Your number must not be 3

Enter a number: 1

Number accepted!

1

Using A Git Branch In MSBuild

Project Deployment Challenge

I’m just sharing experiences

I’ve worked with many teams and my role was mostly as a helper and problem solver. This is what I actually enjoy about my work. Well, that and ETL.

In a new job, I discovered that there was no indication on the server as to what stage of deployment the project was in. Yes, each deployment went to a different server based on the git branch, but there was nothing on the server saying what the environment was. This meant I couldn’t use the typical variety of AppSettings configurations. I found that even web.config was blocked on deployment. I asked if we could fix this and the CI/CD pipeline manager said he had no idea how to do that or why it would even be needed. He even advised just modifying the Program.cs for each deployment.

Note

IIS servers do allow configuration of environment variables. An environment variable can also be configured using web.config if it isn’t blocked on the server.

Why is this environment variable important?

So, why would this matter? Well, environment variables, like EnvironmentName, can decide what the server environment is going to be like. This can also be used to determine what actions to take in that environment or even provide secret information only the server admin knows.

Like many already do, we should have had different AppSettings configurations to configure each application for its current environment. The AppSettings configuration can include things like the application version, what database to use for that stage, where to send the “error” warning email, where to send the automated application emails, who is to be the initial administrator, where to store files locally, etc. Basically, up until I found a workaround, we had put everything into one appsettings.json for all stages and the Program.cs code had to be modified for each deployment. What could go wrong with that?

My AppSettings suggestions were based on Microsoft’s Learning website…

- appsettings.Development.json - A developer’s environment would be radically different: the developer would be using a different (local) database, might want to disable the application emails, would be the application admin, would store files somewhere else locally, and more.

- appsettings.Staging.json - The stage environment would be nothing like the developer’s environment, but it should work just like the production environment. It would of course have its own database, some email addresses might be different, and files would be stored in a different place on that server.

- appsettings.Production.json - The production environment would be like the stage environment, only far more stable. The application server and the database servers should have been faster and more stable as well. Experiments should not have been happening in this environment because they would throw the users into entirely understandable tantrums.

One of these AppSettings versions would be chosen based on the EnvironmentName environment variable.

My project hack

I made a fun change to each of the projects so the proper AppSettings configuration would be used on deployment. It did take the team a while to adjust but the code got a lot cleaner and the deployments were more stable.

Here is the MSBuild modification I made:

Something.csproj

<PropertyGroup>

<GitBranch>$([System.IO.File]::ReadAllText('$(MSBuildThisFileDirectory)..\..\.git\HEAD').Replace('ref: refs/heads/', '').Trim())</GitBranch>

</PropertyGroup>

<Choose>

<When Condition="'$(GitBranch)' == 'main' And '$(Configuration)' == 'Release'">

<PropertyGroup>

<EnvironmentName>Production</EnvironmentName>

</PropertyGroup>

</When>

<When Condition="'$(GitBranch)' == 'develop' And '$(Configuration)' == 'Release'">

<PropertyGroup>

<EnvironmentName>Staging</EnvironmentName>

</PropertyGroup>

</When>

<Otherwise>

<PropertyGroup>

<EnvironmentName>Development</EnvironmentName>

</PropertyGroup>

</Otherwise>

</Choose>

What is happening here is that, in GitBranch, I’m having MSBuild look for the .git folder and then read the HEAD file. Using the String.Replace() function, ‘ref: refs/heads/’ is removed leaving just the branch name. The branch name is trimmed and then stored in the GitBranch custom property.
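The string handling is small enough to sketch outside MSBuild. The Python below mirrors the Replace and Trim steps on the text of a HEAD file; `branch_from_head` is a name invented for the example.

```python
def branch_from_head(head_text: str) -> str:
    """Return the branch name from the contents of a .git/HEAD file."""
    prefix = "ref: refs/heads/"
    text = head_text.strip()
    # On a detached HEAD the file holds a commit hash, which is returned unchanged.
    return text[len(prefix):] if text.startswith(prefix) else text


print(branch_from_head("ref: refs/heads/develop\n"))  # develop
```

The detached HEAD case is worth noting because some build servers check out a commit rather than a branch, in which case no branch name is available from this file.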

Choose is a feature MSBuild supports in csproj files that lets the developer pick between alternatives based on property values. In this project, ‘main’ was used for production and ‘develop’ for stage, and anything else, including any build that is not ‘Release’, became a ‘Development’ environment. Each When element’s Condition attribute simply evaluates GitBranch and Configuration as strings and compares them to the values that should be met. When a branch is chosen, the EnvironmentName property gets set. It is basically like a C# switch statement or expression.

Environment.SetEnvironmentVariable("EnvironmentName",

(GitBranch, Configuration) switch

{

("main", "Release") => "Production",

("develop", "Release") => "Staging",

_ => "Development"

});

While the rest of the team were getting paid more than I was, I admit that I was hired to help them fix problems and help migrate old applications. I love helping and I’m not criticizing the team here, though it might sound like it. I like helping a team that listens.

2022

Namecheap DDNS

Keeping my NameCheap DDNS IPs updated.

NameCheap

Some of my domain names are registered with NameCheap. As with my other registrars, I often use DDNS, but with NameCheap it is a lot more difficult to keep the IP addresses up to date. Rather than use their provided application, I made my own script a long time ago because it was a fun challenge, and I’ve now decided to share it.

As a note, I also have been using an Asus router for many years now. They provide their own DDNS service with their own provider domain named ‘asuscomm.com’. It also comes with its own SSL certificate. You get to choose your own subdomain. As an example, mine could be ‘asus-ddns-subdomain’ giving me a final url like ‘asus-ddns-subdomain.asuscomm.com’. My ‘asus-ddns-subdomain.asuscomm.com’ url has the IP address assigned by my internet provider and Asus keeps it up to date. This is useful in a huge variety of ways, which is one of several reasons I still use an Asus router.

DDNS.sh Script

The script I’m using is named ddns.sh and I keep it in my docker folder at /svc/docker so it is easy to find and edit when needed. This script requires CRON, but most runs finish very quickly and send nothing to NameCheap unless an update is actually necessary.

Disable Echo

First I wanted to be able to turn echo off when it isn’t needed. I’m using the environment variable CRON as a flag to turn echo off. For echo, I’m using a variable that starts with the echo command and, if the CRON environment variable is there, the variable changes to “:” which just means “don’t do anything”.

echo="echo"

if [ ! -z "$CRON" ]; then

echo=":"

fi

Domains and Subdomains

The next step is to create two arrays, one for the root domains and the other for the subdomains. This has actually made it pretty easy to add new domains or subdomains and the CRON job doesn’t even have to be restarted.

Each array index is the root domain. On NameCheap, every domain has its own API Access Key for updating the IP address, which is why the keys need to be included here.

Every subdomain, indexed by their own root domain, is also provided here in the script. For NameCheap, the subdomains don’t know the root domain’s IP address so they need to be set individually. Each subdomain here is separated by a ‘|’.

Please note, every subdomains element starts with ‘@’. This is the reference to the root domain itself. If you are using this script and have a domain that doesn’t have any subdomains, like ‘domain6.tld’, then you still need to provide the API key, of course, and the subdomains entry must, at the very least, have the ‘@’ listed and, in this case, no ‘|’ will be needed.

declare -A domains

declare -A subdomains

domains["domain4.tld"]="random-namecheap-api-key-one"

domains["domain5.tld"]="random-namecheap-api-key-two"

domains["domain3.tld"]="random-namecheap-api-key-three"

domains["domain6.tld"]="random-namecheap-api-key-four"

subdomains["domain4.tld"]="@|www.|immich.|nextcloud.|collabora."

subdomains["domain5.tld"]="@|www."

subdomains["domain3.tld"]="@|www."

subdomains["domain6.tld"]="@"

IP Address Found

From here, the script needs to know what the currently assigned IP address is. For that, the script uses the Asus provided url. The host command gives us a breakdown of the url provided, grep finds the ‘has address’ part, and awk extracts the IP address. The IP address is then assigned to the local_ip variable.

ddns="asus-ddns-subdomain.asuscomm.com"

local_ip=$(host -t a $ddns | grep "has address" | awk '{print $4}')

$echo "Local IP: $local_ip"

Iterating Over The Domains

We need to report the new IP address to NameCheap for every root domain. The ‘!’ is saying to return the keys of each element of the array. The script will go through every root domain like this…

for domain in "${!domains[@]}"; do

...

done

Iterating Over Subdomains

For each root domain we also need to report the new IP address to NameCheap for every subdomain. The script continues by getting the subdomains and splitting them up into an array called ‘hosts’ using the bash ‘declare -a’ operation. The script can then iterate over the subdomains even if it is just an ‘@’.

for domain in "${!domains[@]}"; do

str=${subdomains[$domain]}

IFS='|'

declare -a hosts=($str)

for host in "${hosts[@]}"; do

...

done

done
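To show what the split produces, here is a short Python sketch that turns one subdomains entry into host labels and full names. It follows the conventions described above (‘@’ for the root, a trailing dot on each subdomain); `expand_hosts` is a hypothetical helper, not part of ddns.sh.

```python
def expand_hosts(domain: str, subdomains: str) -> list[tuple[str, str]]:
    """Return (host label, full name) pairs for a '|'-separated subdomain entry."""
    result = []
    for entry in subdomains.split("|"):
        if entry == "@":
            # '@' stands for the root domain itself.
            result.append(("@", domain))
        else:
            # 'www.' becomes the label 'www' and the full name 'www.<domain>'.
            result.append((entry.rstrip("."), entry + domain))
    return result


for label, name in expand_hosts("domain4.tld", "@|www.|immich."):
    print(label, name)
```

The label is what the update call expects as the host, while the full name is what gets looked up in DNS.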

Authoritative Name Servers

The script needs to determine the authoritative name server for each subdomain as we will be asking that name server what the current IP address is for that subdomain.

Here, the ‘@’ is stripped from the subdomain, represented as host, so the root domain is looked up as just the domain name while something like ‘www.’ is prefixed to it. The host command is then used to find the name server for the resulting name. The result has a lot of information, so grep looks for the name server part. Just in case there is more than one name server listed, the head command gets the first one. The awk command then returns the name server’s name.

hst="${host//@/}"

authoritative_nameservers=$(host -t ns $hst$domain | grep "name server" | head -n1 | awk '{print $4}')

$echo "Authoritative nameserver for $hst$domain: $authoritative_nameservers"

Subdomain IP Address

This seems silly to do things this way when we could just use something like ‘host -t a $hst$domain’ but, in this situation, it is best to get the IP address from the authoritative name server. The dig command is the best way I could find at the time for this purpose.

resolved_ip=$(dig +short @$authoritative_nameservers $hst$domain)

$echo "Resolved IP for $hst$domain: $resolved_ip"

Do We Need To Do Anything?

At this point, we can compare the resolved IP address with the local IP address to determine if anything has changed. Regardless of the outcome, the subdomain and domain loops continue to their end.

if [ "$resolved_ip" = "$local_ip" ]; then

$echo "$domain records are up to date!"

else

...

fi

Update The NameCheap DDNS IP Address

If the resolved IP address does not match the local IP address then it is time to tell NameCheap DDNS that the IP address has changed for the current subdomain.

This is done by using their DDNS update API via the curl command. We provide the subdomain, or just an ‘@’ if needed, the root domain, and the “password” that is actually the API Access Key.

response=$(curl -s "https://dynamicdns.park-your-domain.com/update?host=${host//./}&domain=$domain&password=${domains[$domain]}")
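The query string can also be assembled with Python's standard library, which takes care of escaping characters such as ‘@’. The endpoint is the one used in the curl command above; `build_update_url` is a made-up helper and the sketch only builds the URL without sending anything.

```python
from urllib.parse import urlencode

# Endpoint taken from the curl command in the script.
UPDATE_ENDPOINT = "https://dynamicdns.park-your-domain.com/update"


def build_update_url(host: str, domain: str, password: str) -> str:
    """Build the DDNS update URL for one host of one domain."""
    # urlencode percent-encodes reserved characters, so '@' becomes '%40'.
    query = urlencode({"host": host, "domain": domain, "password": password})
    return f"{UPDATE_ENDPOINT}?{query}"


print(build_update_url("www", "domain4.tld", "random-namecheap-api-key-one"))
```

Escaping matters most for the password value, since a key containing ‘&’ or ‘=’ would otherwise break the query string.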

Checking the response from the NameCheap API, after trying to update the IP address, for errors is a big help. This is best run independently outside of CRON so you can see any error message.

err_count=$(grep -oP "<ErrCount>\K.*(?=</ErrCount>)" <<<"$response")

err=$(grep -oP "<Err1>\K.*(?=</Err1>)" <<<"$response")

if [ "$err_count" = "0" ]; then

$echo "API call successful! DNS propagation may take a few minutes..."

else

echo "API call failed! Reason: $err"

fi
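The two grep calls pull the text between the ErrCount and Err1 tags. The same extraction can be sketched in Python with regular expressions. The sample bodies in the usage lines are trimmed, made-up stand-ins that only contain the two tags the script reads, and `parse_update_response` is a hypothetical name.

```python
import re


def parse_update_response(body: str) -> tuple[bool, str]:
    """Return (success, first error message) from the XML body of an update call."""
    count = re.search(r"<ErrCount>(.*?)</ErrCount>", body, re.DOTALL)
    error = re.search(r"<Err1>(.*?)</Err1>", body, re.DOTALL)
    # Success means the tag is present and holds exactly zero errors.
    ok = count is not None and count.group(1).strip() == "0"
    return ok, (error.group(1).strip() if error else "")


# Made-up sample bodies for illustration only.
print(parse_update_response("<ErrCount>0</ErrCount>"))
print(parse_update_response("<ErrCount>1</ErrCount><Err1>Example failure text</Err1>"))
```

Treating a missing ErrCount tag as a failure is a deliberate choice here, so an unexpected response never gets reported as a success.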

CRON for DDNS.sh Script

CRON will run the script at short intervals watching for my internet service provider changing my IP address since they don’t even tell me when it happens. I’m going to assume you know how to use CRON.

Running ‘crontab -e’ will prompt you to choose your editor if you haven’t used it before.

Add the following line at the end of the crontab list of tasks. The task runs the ddns.sh script every 10 minutes from the top of the hour. You are free to adjust that timing as you want. I’ve seen people using 5 minute intervals, 1 hour intervals, and others. Including ‘CRON=running’, as described above, adds the CRON environment variable to the script telling it to not use echo.

0,10,20,30,40,50 * * * * CRON=running /svc/docker/ddns.sh

2021

JSON Schema

JSON Standards

Most people are not aware that there are standards for JSON – or lots of other things in the software development world. Let’s have a look; if you are a learner this will push you forward…

JSON stands for "JavaScript Object Notation", a simple data interchange format. There are lots of these data interchange formats but JSON is great for web development due to its strong ties to JavaScript.

JSON Structure

JSON supports the following data structures but they all have different interpretations in various programming languages:

- object: { "key1": "value1", "key2": "value2" }

- array: [ "first", "second", "third" ]

- number: 42, 3.1415926

- string: "This is a string"

- boolean: true, false

- null: null

What some people might expect with JSON:

{

"name": "John Doe",

"birthday": "February 22, 1978",

"address": "Richmond, Virginia, United States"

}

What JSON should look like:

{

"first_name": "John",

"last_name": "Doe",

"birthday": "1978-02-22",

"address": {

"home": true,

"street_address": "2020 Richmond Blvd.",

"city": "Richmond",

"state": "Virginia",

"country": "United States"

}

}

One of the JSON sample representations is better than the other. The first is quick and dirty while the second has a thought-out structure. A schema makes the second one even better and easier to interpret when you need to support multiple consumers or various programming languages.
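To see why the structured version is easier on a consumer, here is a small Python sketch that reads it using only the standard library. The variable names are mine; the data is the second sample, written with the double quotes that JSON parsers require.

```python
import json
from datetime import date

structured = """
{
  "first_name": "John",
  "last_name": "Doe",
  "birthday": "1978-02-22",
  "address": {
    "home": true,
    "street_address": "2020 Richmond Blvd.",
    "city": "Richmond",
    "state": "Virginia",
    "country": "United States"
  }
}
"""

person = json.loads(structured)

# An ISO 8601 date parses directly; no guessing about month names or order.
birthday = date.fromisoformat(person["birthday"])

# Nested fields are addressed by key instead of splitting a free-text string.
city = person["address"]["city"]

print(birthday.year, city)  # prints: 1978 Richmond
```

With the first sample, the same consumer would have to parse "February 22, 1978" and split the address on commas, hoping every producer formats them the same way.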

This is an example of the JSON Schema for the second JSON sample. Note how it is still JSON also:

{

"$id": "https://yoursite.com/person.schema.json",

"$schema": "https://json-schema.org/draft/2020-12/schema",

"title": "Person",

"type": "object",

"properties": {

"first_name": { "type": "string" },

"last_name": { "type": "string" },

"birthday": { "type": "string", "format": "date" },

"address": {

"type": "object",

"properties": {

"home": { "type": "boolean" },

"street_address": { "type": "string" },

"city": { "type": "string" },

"state": { "type": "string" },

"country": { "type": "string" }

}

}

}

}

Declaring a JSON Schema

A schema needs to be shared. It is a way of saying "This is what we expect from our clients and what we will deliver." Doesn’t this make life simpler rather than guessing around until something works?

Is this something new? Nope, even XML had schemas. Do you remember XSD? There was a reason for that. Every programming language out there has a "schema" of sorts as well.

In the above example JSON schema one should have noticed the "type" keyword. A JSON property can often be misinterpreted. "Is it a number? A string? An object? What do I provide?", would be some common questions.

In the schema, "type" specifies the JSON type that is accepted. Sometimes a producer / consumer can handle multiple types but we need to be specific.

{ "type": "number" }

Accepts number only.

{ "type": ["number", "string"] }

Accepts number or string.

{ "type": "integer" }

Accepts integer number only.
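As a rough illustration of what a validator does with "type", here is a simplified Python sketch. It is not a JSON Schema implementation and the function name is made up; it only maps the type names onto the Python values that json.loads produces.

```python
def matches_type(value, type_spec) -> bool:
    """Check a parsed JSON value against a "type" keyword (name or list of names)."""
    if isinstance(type_spec, list):
        # A list of type names means "any one of these".
        return any(matches_type(value, name) for name in type_spec)

    is_bool = isinstance(value, bool)  # bool is a subclass of int in Python
    if type_spec == "number":
        return isinstance(value, (int, float)) and not is_bool
    if type_spec == "integer":
        if is_bool:
            return False
        # A float with no fractional part, such as 1.0, still counts as an integer.
        return isinstance(value, int) or (
            isinstance(value, float) and value.is_integer()
        )

    simple = {
        "string": str,
        "boolean": bool,
        "object": dict,
        "array": list,
        "null": type(None),
    }
    # An unknown type name raises KeyError in this sketch.
    return isinstance(value, simple[type_spec])


print(matches_type(42, "number"))                # True
print(matches_type("42", "number"))              # False
print(matches_type("42", ["number", "string"]))  # True
print(matches_type(3.1415926, "integer"))        # False
```

The explicit bool check matters because Python would otherwise accept true and false as numbers.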

Additional attributes can accompany a "type". For example, one could add ‘description’, ‘minimum’, ‘maximum’, ‘minLength’, ‘maxLength’, ‘pattern’, ‘format’ and more properties. Just stick to the standards one makes.

JSON Schema also defines keywords like ‘$schema’ (identifies the schema dialect being used), ‘title’, ‘description’, ‘default’, ‘examples’, ‘$comment’, ‘enum’, ‘const’, ‘required’ and many more.

Non-JSON Data

To include non-JSON data one can also make use of the schema to clarify what is being passed around by using annotations like ‘contentMediaType’ and ‘contentEncoding’.

As an example, the proposed schema:

{

"type": "object",

"properties": {

"contentEncoding": { "type": "string" },

"contentMediaType": { "type": "string" },

"data": { "type": "string" }

}

}

The sample data:

{

"contentEncoding": "base64",

"contentMediaType": "image/png",

"data": "iVBORw0KGgoAAAANSUhEUgAAABgAAAA..."

}
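Producing and consuming a document like the sample data could look something like this in Python. The two function names are hypothetical, and the payload is just the 8-byte signature that starts every PNG file rather than a real image.

```python
import base64
import json


def wrap_binary(payload: bytes, media_type: str) -> str:
    """Put binary data into a JSON document shaped like the sample above."""
    envelope = {
        "contentEncoding": "base64",
        "contentMediaType": media_type,
        "data": base64.b64encode(payload).decode("ascii"),
    }
    return json.dumps(envelope)


def unwrap_binary(document: str) -> bytes:
    """Read the document back and return the original bytes."""
    envelope = json.loads(document)
    if envelope["contentEncoding"] != "base64":
        raise ValueError("unsupported contentEncoding")
    return base64.b64decode(envelope["data"])


png_signature = b"\x89PNG\r\n\x1a\n"  # first 8 bytes of any PNG file
document = wrap_binary(png_signature, "image/png")
restored = unwrap_binary(document)
print(restored == png_signature)  # True
```

The consumer checks contentEncoding before decoding, which is the point of declaring it: the receiver does not have to guess how the string was produced.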

References

For more details: https://json-schema.org

Specification: https://json-schema.org/specification.html

If you need help making a JSON schema: https://jsonschema.net/

2020

SOLID

SOLID is an acronym for the first five object-oriented design (OOD) principles by Robert C. Martin

- What is SOLID?

- Single Responsibility Principle

- Open / Closed Principle

- Liskov Substitution Principle

- Interface Segregation Principle

- Dependency Inversion Principle

What is SOLID?

SOLID is an acronym for the 5 basic principles of good software architecture:

| Letter | Abbr | Name | Basic Description |
|---|---|---|---|
| S | SRP | Single Responsibility Principle | A class should have only a single responsibility, that is, it only has 1 specific task to fulfill. |
| O | OCP | Open / Closed Principle | A class that needs a new behavior should be extended and not modified. |
| L | LSP | Liskov Substitution Principle | A class should be replaceable by a subclass that can change how a task is completed but it doesn’t damage the operation of the dependent classes. In other words, a parent class should be able to refer to child objects seamlessly during runtime via polymorphism. |
| I | ISP | Interface Segregation Principle | Instead of one huge multi-purpose interface one should have multiple specific interfaces. A client should not be forced to code for an interface they do not need. |
| D | DIP | Dependency Inversion Principle | Implementation should depend on abstraction rather than some concrete solution. High level objects should not have to depend on low level objects; they only need to know the feature is implemented by something. |

Polymorphism allows the expression of some sort of contract, with potentially many types implementing that contract (whether through class inheritance or not) in different ways, each according to their own purpose. Code using that contract should not have to care about which implementation is involved, only that the contract will be obeyed.

Single Responsibility Principle

SRP keeps classes simple, easy to maintain and minimizes the effects of any minor changes. A class should only have one responsibility and not multiple. This can sound confusing at first; someone will likely bring up something like a view model or controller but that, in itself, has a single responsibility to manage other classes that themselves have a very simple single responsibility.

Broken - Writing to a log file is not the job of the Aggregator class. ___

class Aggregator

{

public void Add(Entity entity)

{

try

{

// Add entity to aggregator.

}

catch (Exception ex)

{

System.IO.File.WriteAllText(@"c:\Error.txt", ex.ToString());

}

}

}

Fixed ___

class Aggregator

{

private Logger log = new Logger();

public void Add(Entity entity)

{

try

{

// Add entity to aggregator.

}

catch (Exception ex)

{

log.Error(ex.ToString());

}

}

}

class Logger

{

public void Error(string error)

{

System.IO.File.WriteAllText(@"c:\Error.txt", error);

}

}

Open / Closed Principle

Again, OCP keeps classes simple, easy to maintain and minimizes the impact of changes to the code. OCP means that the class should not be modified (i.e. it is closed) but it can be extended (i.e. it is open). A developer will often say, “Hey, I need to add this new capability to this class for this specific case.” That modification can be reckless. One should keep the original class intact so the code relying on that original class isn’t broken. To add that new functionality the class basically has a new task and thus needs to change; that change should be an extension via inheritance.

Broken ___

class Drone

{

public int DroneType {get; set; }

public DateTime ArrivalTime(double kilometers)

{

if (DroneType == 0)

{

return DateTime.Now.AddHours(kilometers);

}

else if (DroneType == 1)

{

return DateTime.Now.AddHours(kilometers / 2.0);

}

else if (DroneType == 2)

{

return DateTime.Now.AddHours(kilometers / 32.0);

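}

// Without this the method does not return on every path and will not compile.

throw new InvalidOperationException("Unknown DroneType.");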

}

}

Fixed (Polymorphism Inheritance) ___

class Drone

{

public virtual DateTime ArrivalTime(double kilometers)

{

return DateTime.Now.AddHours(kilometers);

}

}

class SpyDrone : Drone

{

public override DateTime ArrivalTime(double kilometers)

{

return base.ArrivalTime(kilometers / 2.0);

}

}

class AttackDrone : Drone

{

public override DateTime ArrivalTime(double kilometers)

{

return base.ArrivalTime(kilometers / 32.0);

}

}

Liskov Substitution Principle

LSP keeps the overall program working as it was designed even though a backing class might have been swapped out for another implementation. A good example of LSP in use is the mock object often used in unit testing. The substituted object can be based on interfaces or an abstract class as long as the object instance doesn’t break the calling code.

Example - Substituting a logger class doesn’t break Aggregator ___

class Aggregator

{

// private ILogger log = new FileLogger();

private ILogger log = new ConsoleLogger();

public void Add(Entity entity)

{

try

{

// Add entity to aggregator.

}

catch (Exception ex)

{

log.Error(ex.ToString());

}

}

}

interface ILogger

{

void Error(string message);

}

class FileLogger : ILogger

{

public void Error(string error)

{

System.IO.File.WriteAllText(@"c:\Error.txt", error);

}

}

class ConsoleLogger : ILogger

{

public void Error(string error)

{

System.Console.Error.WriteLine(error);

}

}

Interface Segregation Principle

ISP helps protect clients from developers damaging interfaces which are normally “contracts” with other clients. Interfaces used by other clients, including actual people or classes throughout your code repositories, should not change because that change could break the client’s software implementation. There is also the tendency to put too much stuff into an interface which can almost be seen as the same as violating the Single Responsibility Principle though from an interface perspective. Requirements matter and sometimes it is a good idea to break up a proposed interface to provide more flexibility.

Original ___

interface IDatabase

{

void Add(Entity entity);

}

class Client: IDatabase

{

public void Add(Entity entity)

{

// Add entity to database.

}

}

Broken ___

interface IDatabase

{

void Add(Entity entity);

Entity Read();

}

// Broken!

class Client: IDatabase

{

public void Add(Entity entity)

{

// Add entity to database.

}

}

Fixed ___

interface IDatabase

{

void Add(Entity entity);

}

interface IDatabaseV1 : IDatabase

{

// Has Add Method.

Entity Read();

}

class ClientWithRead: IDatabaseV1, IDatabase

{

public void Add(Entity entity)

{

Client obj = new Client();

obj.Add(entity);

}

public Entity Read()

{

// Read from database (placeholder so the method compiles).

throw new NotImplementedException();

}

}

class Client: IDatabase

{

public void Add(Entity entity)

{

// Add entity to database.

}

}

// Results...

IDatabase a = new Client(); // Previous clients happy!

a.Add(entity);

IDatabaseV1 b = new ClientWithRead(); // New client requirements.

b.Add(entity);

entity = b.Read();

Dependency Inversion Principle

Instead of hard coding which component a class uses internally, flexibility should be built in. With DIP we want to invert, or delegate, the responsibility for choosing that swappable component. As an example, instead of using a bunch of IF statements to choose your logging mechanism (that’s a no), adding some flag to your logging class (that’s a no), or just becoming inflexible and not allowing for this kind of change, one should use Dependency Inversion.

Broken 1 ___

class Aggregator

{

private Logger log = new Logger();

public void Add(Entity entity, bool logToFile = true)

{

try

{

// Add entity to aggregator.

}

catch (Exception ex)

{

log.LogToFile = logToFile;

log.Error(ex.ToString());

}

}

}

class Logger

{

public bool LogToFile { get; set; } = true;

public void Error(string error)

{

if (LogToFile)

{

System.IO.File.WriteAllText(@"c:\Error.txt", error);

}

else

{

System.Console.Error.WriteLine(error);

}

}

}

Broken 2 ___

class Aggregator

{

private ILogger log1 = new FileLogger();

private ILogger log2 = new ConsoleLogger();

public void Add(Entity entity, bool logToFile = true)

{

try

{

// Add entity to aggregator.

}

catch (Exception ex)

{

if (logToFile)

log1.Error(ex.ToString());

else

log2.Error(ex.ToString());

}

}

}

interface ILogger

{

void Error(string message);

}

class FileLogger : ILogger

{

public void Error(string error)

{

System.IO.File.WriteAllText(@"c:\Error.txt", error);

}

}

class ConsoleLogger : ILogger

{

public void Error(string error)

{

System.Console.Error.WriteLine(error);

}

}

Fixed ___

class Aggregator

{

private ILogger log;

public Aggregator(ILogger logger)

{

log = logger;

}

public void Add(Entity entity)

{

try

{

// Add entity to aggregator.

}

catch (Exception ex)

{

log?.Error(ex.ToString());

}

}

}

interface ILogger

{

void Error(string message);

}

class FileLogger : ILogger

{

public void Error(string error)

{

System.IO.File.WriteAllText(@"c:\Error.txt", error);

}

}

class ConsoleLogger : ILogger

{

public void Error(string error)

{

System.Console.Error.WriteLine(error);

}

}

// Usage:

Aggregator agg1 = new Aggregator(new FileLogger());

Aggregator agg2 = new Aggregator(new ConsoleLogger());

CQRS

CQRS is the Command Query Responsibility Segregation design pattern.

CQRS (Command Query Responsibility Segregation) is a powerful architectural pattern that seems to cause quite a lot of confusion; it breaks from the norms most developers cling to by segregating the read and write operations with interfaces (check out SOLID to see how this improves performance, connections, and maintenance). With the popularity of micro-services and the event-based programming model, it is important to know what CQRS is. This is a potentially good option for working with large complicated databases.

First, let’s make sure we are in agreement on what CRUD (Create, Read, Update and Delete), the old CQRS competitor, is. When one thinks about this, CRUD is what most basic software systems rely on. A developer has some records; they may want to read some records, update the records, create a new record or delete some records. If one wants to build a system, a reasonable starting point would be using the same model for retrieving objects as well as updating objects. As an example, assume you want to write a “Video Store Application”. You may have a VideoInventoryService that lets you do things such as add new videos to the inventory, mark some of the videos as loaned out, check if there is a specific video, etc. That would be a very simple CRUD system.

| Letter | Name | Description |
|---|---|---|
| C | Command | A Command is a method that performs an action. These would be like the Create, Update and Delete parts of a CRUD system. |
| Q | Query | A Query is a method that returns data to the caller without modifying the records stored. This is the Read part of a CRUD system. |
| R | Responsibility | The Responsibility part here is about providing one responsibility for the Command part and another responsibility for the Query part of the CQRS system. |
| S | Segregation | Segregation is the explicit separation of those Command and Query responsibilities, improving performance and maintenance. |

In CQRS the goal is to segregate the responsibilities for executing commands and queries. This simply means that in a CQRS system, there would be no place for a VideoInventoryService that is responsible for both queries and commands. You could have VideoInventoryReadService, VideoLendingReadService and maybe more. These services are broken down into separate responsibilities without concern for the other services. They can even run simultaneously, meaning the micro-services don’t have to wait for a write or a read to finish. It is the job of the appropriate micro-service to finish its task while allowing others to continue.
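A minimal, in-process Python sketch of that split might look like the following. VideoInventoryReadService is the name used above; VideoStore, VideoInventoryCommandService and all of the method names are hypothetical, and a real system would put a database or message queue where the shared dictionary is.

```python
from dataclasses import dataclass, field


@dataclass
class VideoStore:
    """Stand-in for the data source: maps a title to True when it is loaned out."""
    loaned: dict = field(default_factory=dict)


class VideoInventoryCommandService:
    """Command side: changes state and returns nothing."""

    def __init__(self, store: VideoStore) -> None:
        self._store = store

    def add_video(self, title: str) -> None:
        self._store.loaned.setdefault(title, False)

    def lend_video(self, title: str) -> None:
        # Only a known video that is not already out can be lent.
        if self._store.loaned.get(title) is not False:
            raise ValueError(f"{title!r} is not available")
        self._store.loaned[title] = True


class VideoInventoryReadService:
    """Query side: returns data and never modifies the store."""

    def __init__(self, store: VideoStore) -> None:
        self._store = store

    def has_video(self, title: str) -> bool:
        return title in self._store.loaned

    def is_available(self, title: str) -> bool:
        return self._store.loaned.get(title) is False


store = VideoStore()
commands = VideoInventoryCommandService(store)
queries = VideoInventoryReadService(store)

commands.add_video("Alien")
available_before = queries.is_available("Alien")
commands.lend_video("Alien")
available_after = queries.is_available("Alien")
print(available_before, available_after)  # True False
```

Neither class exposes the other's methods, so a caller that only needs to read cannot change anything by accident, and each side can be scaled or replaced on its own.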

From a desktop development world, or maybe even the monolithic services view, this does not sound like the most practical thing. And in some cases it may not be practical. How complex is your data source? Will breaking it down make development and maintenance easier?

Another aspect to keep in mind is that if the developers are employing an actual domain driven development practice then the CQRS system is made even more simple by only being concerned with its own specific domain. The purposes of the data driven micro-services become more simplified and the micro-services themselves no longer have to be concerned about details outside of their domain. Also, another nice thing about CQRS is there is no requirement for Event Sourcing. Commands are in effect streams of events that are persisted in the command part of the CQRS system. Queries only see and interpret these events once the data source is updated; no early peeks or corrupted data. In an event-driven distributed system like that seen in services or desktop applications there must be constant messages being passed around to keep changes orchestrated. Using the same domain model, or not separating these responsibilities, would be a mistake.

Finally, in summary, for most trivial systems it is not necessary to implement CQRS. In micro-services, the CRUD system is fine when the system’s purpose is very focused on a common task like instant messaging, creating a meme repository or maybe having a driving app. Adding complexity like a myriad of unrelated tasks, a complex multi-purpose database, or other non-trivial complications makes CRUD more difficult. The added complexity may end up detrimental to the system design. Plan carefully and be ready for the future.

Redis Cache

Redis, which stands for Remote Dictionary Server, is a fast, open-source, in-memory key-value data store for use as a database, cache, message broker, and queue.

What is Redis Cache?

Redis is an open-source distributed in-memory data store. We can use it as a distributed NoSQL database, as a distributed in-memory cache, or as a pub/sub messaging engine. The most popular use case appears to be using it as a distributed in-memory caching engine. Redis supports a variety of data types including strings, hashes, lists, sets, and sorted sets. Strings and hashes are the most common means for caching. There is even support for geo-spatial indexes where information can be stored by latitude and longitude. There is also a command line interface called redis-cli.exe that makes all the Redis features easily accessible to IT / admin. There are also asynchronous versions of almost every method for accessing Redis.

Installing Redis On Windows 10

Get the MSI binary from here: https://github.com/microsoftarchive/redis/releases

Right now, as of 10/5/2020, we are using Redis-x64-3.0.504.msi. During installation all defaults are fine with one exception: always check "Add the Redis installation folder to the PATH environment variable".

Once Redis is installed there are some adjustments that may need to be made if the defaults are not sufficient. Specifically, if a password is required, the number of databases must change, a cluster is desired, TLS/SSL is needed or there is a port change then it will be necessary to edit the redis.windows-service.conf file found in the Redis installation folder:

- To change the port simply search for "port" and change the port number. The default is 6379.

- To change the number of databases search for "databases" and change the count. The default is 16.

- To change the password search for "requirepass" and remove the comment while setting a proper password. The default is none.

- To enable cluster search for "cluster-enabled" and start from there. The default is false.

Installing Redis Client in Visual Studio

There are various Redis clients available (https://redis.io/clients#c) but StackExchange.Redis is the C# client recommended by RedisLabs due to its high performance and common usage (https://docs.redislabs.com/latest/rs/references/client_references/client_csharp/).

For the project that will be using Redis, right-click and select "Manage Nuget Packages…". Search for and install "StackExchange.Redis". Alternatively, you can also use the Package Manager Console to run "Install-Package StackExchange.Redis".

The documentation for StackExchange.Redis can be found on github: https://stackexchange.github.io/StackExchange.Redis/

Accessing Redis Using StackExchange.Redis

A using directive is required to reference StackExchange.Redis:

using StackExchange.Redis;

To acquire access to the Redis server a ConnectionMultiplexer Connection is required:

ConnectionMultiplexer connect = ConnectionMultiplexer.Connect("hostname:port,password=password");

The ConnectionMultiplexer should not be created per operation. Create it once and reuse it.

With the connection to Redis one needs access to the Redis database where caching will take place:

IDatabase db = connect.GetDatabase();

The default database is 0. The default configuration provides 16 databases, numbered 0 to 15. The number can be changed in the config file. One idea might be to use a separate database per Tenant to keep things separated.

Configuration of StackExchange.Redis

As seen above, the ConnectionMultiplexer can be initialized with a parameter string. The many options can be found in the documentation (https://stackexchange.github.io/StackExchange.Redis/Configuration). Each option is simply separated by commas like this:

var conn = ConnectionMultiplexer.Connect("redis0:6380,redis1:6380,allowAdmin=true");

It starts with the main Redis server, then any cluster servers that the client should know about, and then other options follow. AllowAdmin isn't really recommended though it does expand on what the Redis client can do.

Another configuration option is to convert the parameter string into a ConfigurationOptions object:

ConfigurationOptions options = ConfigurationOptions.Parse(configString);

Or one can simply go straight to using the ConfigurationOptions object directly:

ConfigurationOptions config = new ConfigurationOptions

{

EndPoints = { { "localhost", 6379 } },

KeepAlive = 180,

Password = "changeme"

};

Caching with StackExchange.Redis

Once you have a ConnectionMultiplexer you can then acquire access to a database. Any will do but database 0 is default. The database object provides the caching options. There are various means of caching in Redis but the simplest and most useful for TraQ7 was to use the String methods, StringSet and StringGet, like the following:

// Store and re-use ConnectionMultiplexer. Dispose only when no longer used.

ConnectionMultiplexer conn = ConnectionMultiplexer.Connect("localhost");

IDatabase db = conn.GetDatabase();

...

string value = "Some Value";

db.StringSet("key1", value);

...

string cached = db.StringGet("key1");

...

Storing and retrieving a string value is easy and was the earliest primary function of Redis for caching.

Searching Redis Keys

Redis has KEYS and SCAN commands in the CLI for looking up database keys. KEYS is typically not recommended in production, but SCAN is considered safe. The StackExchange.Redis client makes the decision on which Redis command to execute for best performance. KEYS / SCAN support glob-style pattern matching, which is not the same as RegEx. Glob-style patterns look more like a Linux file search and include the following patterns (use \ to escape a special character):

- h?llo matches hello, hallo, hxllo, etc

- h*llo matches hllo, heeeeello, etc.

- h[ae]llo matches hello and hallo but not anything else like hillo

- h[^e]llo matches hallo, hbllo, etc but not hello

- h[a-c]llo matches hallo, hbllo and hcllo
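To get a feel for how these patterns behave without touching a server, here is a simplified Python sketch that translates a Redis-style glob into a regular expression. This is my own approximation for experimenting, not how Redis implements matching, and it does not handle every edge case (for example, an escaped ] inside brackets).

```python
import re


def redis_glob_to_regex(pattern: str) -> re.Pattern:
    """Translate a Redis-style glob pattern into a compiled regular expression."""
    parts = []
    i = 0
    while i < len(pattern):
        ch = pattern[i]
        if ch == "\\" and i + 1 < len(pattern):
            # A backslash makes the next character literal.
            parts.append(re.escape(pattern[i + 1]))
            i += 2
            continue
        if ch == "?":
            parts.append(".")       # exactly one character
        elif ch == "*":
            parts.append(".*")      # any run of characters, including none
        elif ch == "[":
            end = pattern.find("]", i + 1)
            if end == -1:
                parts.append(re.escape(ch))  # unmatched bracket: treat as literal
            else:
                # [ae], [^e] and [a-c] mean the same thing in a regex.
                parts.append(pattern[i:end + 1])
                i = end + 1
                continue
        else:
            parts.append(re.escape(ch))
        i += 1
    return re.compile("".join(parts), re.S)


def glob_match(pattern: str, key: str) -> bool:
    """Return True when the whole key matches the glob pattern."""
    return redis_glob_to_regex(pattern).fullmatch(key) is not None


print(glob_match("h?llo", "hxllo"))    # True
print(glob_match("h[^e]llo", "hello")) # False
print(glob_match("h[a-c]llo", "hcllo"))# True
```

The whole key has to match, which is why a search for a word anywhere in the key needs a * on both sides of it.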

To search for a specific key(s) you need a server object representing the host or maintenance Redis instance. Then a search based on pattern matching can be applied:

string pattern = "DataTable";

var server = conn.GetServer("localhost", 6379);

var cacheKeys = server.Keys(pattern: $"*{pattern}*");

Deleting Redis Keys

Keys can be deleted individually or as a whole set:

// Delete a single key

db.KeyDelete("key1");

// Delete all keys from database 0 (the connection needs allowAdmin=true)

server.FlushDatabase(0);

Serialization of Redis Values

StackExchange.Redis stores any cached value that is not a primitive datatype as a byte array. TraQ7 Redis Caching does this conversion itself to speed up the process by skipping calls to multiple methods. It is also necessary to specify a JSON setting to preserve all references to help prevent errors due to cycles in the objects being serialized.

readonly Encoding _encoding = Encoding.UTF8;

JsonSerializerSettings _jsonSettings = new JsonSerializerSettings() { PreserveReferencesHandling = PreserveReferencesHandling.Objects };

...

private byte[] Serialize(object item)

{

var type = item?.GetType();

var jsonString = JsonConvert.SerializeObject(item, type, _jsonSettings);

return _encoding.GetBytes(jsonString);

}

private T Deserialize<T>(byte[] serializedObject)

{

if (serializedObject == null || serializedObject.Length == 0)

return default(T);

var jsonString = _encoding.GetString(serializedObject);

return JsonConvert.DeserializeObject<T>(jsonString, _jsonSettings);

}
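The same object to JSON to UTF-8 bytes round trip can be sketched in a few lines of Python for comparison. This is only an illustration with made-up names. Python's json module has no equivalent of PreserveReferencesHandling; it raises ValueError on a circular reference instead of preserving it.

```python
import json
from typing import Any, Optional

ENCODING = "utf-8"


def serialize(item: Any) -> bytes:
    """Turn a JSON-compatible object into UTF-8 bytes for caching."""
    return json.dumps(item).encode(ENCODING)


def deserialize(data: Optional[bytes]) -> Any:
    """Turn cached bytes back into an object; empty or missing data gives None."""
    if not data:
        return None
    return json.loads(data.decode(ENCODING))


cached = serialize({"key1": "Some Value", "count": 3})
print(deserialize(cached))  # {'key1': 'Some Value', 'count': 3}
print(deserialize(b""))     # None
```

Returning None for empty input mirrors the default(T) branch in the C# version, so a cache miss does not blow up in the deserializer.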

Notes:

- Make cache services into Singletons at Startup (helps Redis most)

- Convert serialized objects to byte arrays for faster transaction

- Use JSON setting PreserveReferencesHandling = PreserveReferencesHandling.Objects to enable serializing complex objects with circular references

- Redis Keys search pattern is NOT RegEx. Excluding whole words seems not to be possible.

- Clear the Redis cache when any changes are made to the structure of any classes that are serialized. Either of these commands will do it, and they work inside the redis-cli client as well:

- redis-cli.exe FLUSHDB

- redis-cli.exe FLUSHALL

Integrating HTML5 Canvas In Jekyll

I was just wondering about integrating the HTML5 canvas into my Software Design blog like I did with JavaScript last week. Canvas is still basically JavaScript with a Canvas element.


I could have also added the canvas into the page using Kramdown…

<canvas>Your browser does not support the HTML5 canvas tag.</canvas>

{: #canvas width="400" height="400" style="padding-left: 0; padding-right: 0; margin-left: auto; margin-right: auto; display: none;"}

I saw this clock as a demo a long time ago and I was happily able to reproduce it.

Integrating JavaScript In Jekyll

I was wondering about integrating JavaScript into my Software Design blog and Markdown clearly didn’t support HTML at this time… at least that was what I thought.

There are several ways to include JavaScript on demand using Jekyll.

- RAW HTML which looked messy but it does work

- Modify the header code generated by Jekyll to import JavaScript into the Post

- Modify the header code generated by Jekyll to use a script element

I rejected option 1, though I may use it one day, because it is just messy. Everything must be tightly aligned on the left of the text which is okay for something short but terrible for longer scripts.

I rejected option 2 because I seem to prefer having my javascript loaded rather than having it embedded into my page. In the end it isn’t much different than option 3.

I took the option of having Jekyll generate a separate script element to import multiple scripts from local or remote. I just add a property at the top of the post and my script is loaded and ready to run.

The js-list and css-list properties are now part of any page that needs to use a different JavaScript or CSS file.

---

layout: post

title: "Integrating JavaScript In Jekyll"

date: 2020-05-10 16:24:25 -0700

tags: javascript

js-list:

- "/assets/js/clock1.js"

css-list:

- "/assets/css/clock1.css"

---

The jekyll script I added to the custom header portion looks like this…

<script src="/assets/js/clock1.js"></script>

<link href="/assets/css/clock1.css" rel="stylesheet">

First Blog Post!

This is my very first blog post – ever! I’m very excited!

I’ve had several domains since around 2010. Two were supposed to be blog sites for my friend. The other two were just my name, https://matthewhanna.net and https://matthewhanna.me. I’m always focused on my clients so nothing really ever happened with those domains, other than for email.

I’m so rarely here, these are some things I need to remember about this blog site:

- Jekyll site

- This blog is using Kramdown for markdown

- It supports MathJax

- The free Font Awesome provides some great icons

- This blog uses both “category” (i.e. creates a folder named work, demo, etc) and “tag” (i.e. labels a post with C#, Java, algorithm, etc) so make good use of them

- The sass folder has many CSS classes provided already including notices and animations.

Let’s see this come to life!